3PAR – does CPG setsize to disk-count ratio matter?

On a 3PAR system, best practice is for the setsize defined in a CPG to be a divisor of the number of disks in that CPG. The setsize is essentially the size of a sub-RAID set within the CPG. For example, a setsize of 8 with RAID 5 means each sub-RAID set uses 7 data disks and one parity disk.
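To make the divisor rule concrete, here is a minimal Python sketch. The function names are my own and are not part of any 3PAR tooling, and the disk count and setsizes are just example values. It splits a RAID 5 setsize into data and parity members and checks whether the setsize divides a given disk count.

```python
def raid5_split(setsize: int) -> tuple[int, int]:
    """Return (data, parity) members of one RAID 5 sub-RAID set."""
    if setsize < 3:
        raise ValueError("RAID 5 needs at least 3 members per set")
    return setsize - 1, 1


def divides_disk_count(setsize: int, disk_count: int) -> bool:
    """True if the setsize is a divisor of the number of disks."""
    return disk_count % setsize == 0


if __name__ == "__main__":
    disk_count = 8  # example value
    for setsize in (3, 4, 6, 8):  # example values
        data, parity = raid5_split(setsize)
        fits = divides_disk_count(setsize, disk_count)
        print(f"setsize {setsize}: {data} data + {parity} parity, "
              f"divides {disk_count} disks: {fits}")
```

With 8 disks, only setsizes 4 and 8 pass the check; 3 and 6 do not.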

I ran a few tests on an array of 8 disks. I created 4 CPGs, all using RAID 5 but with different setsizes: 3, 4, 6 and 8. To make the results easier to interpret, I used fully provisioned virtual volumes (VVs) of 100 GB each.

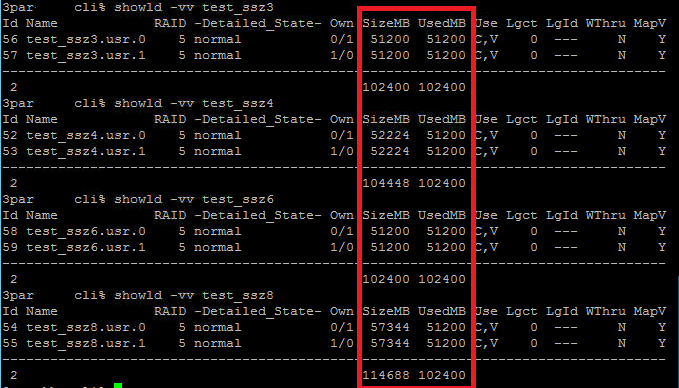

When a 3PAR VV is created, logical disks (LDs) are created for it. To spread data across controller nodes, disks and ports, at least one LD is created per controller node, as you can see in the screenshot. To show the LDs of a VV, use the command showld -vv VV_name.

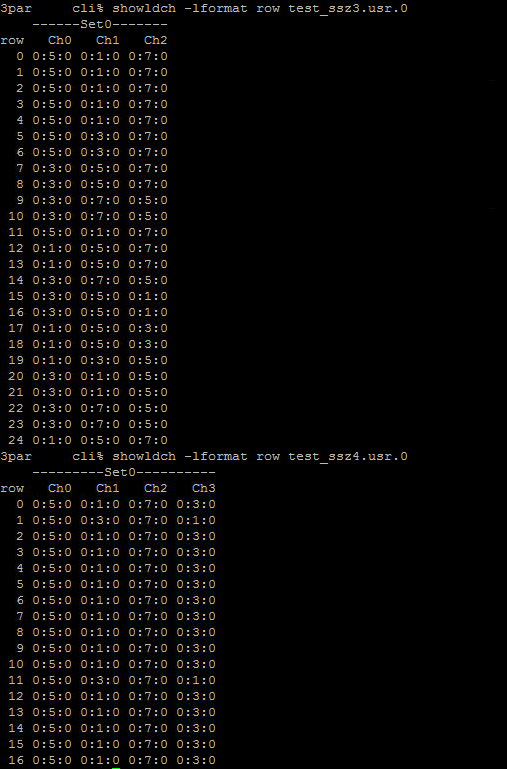

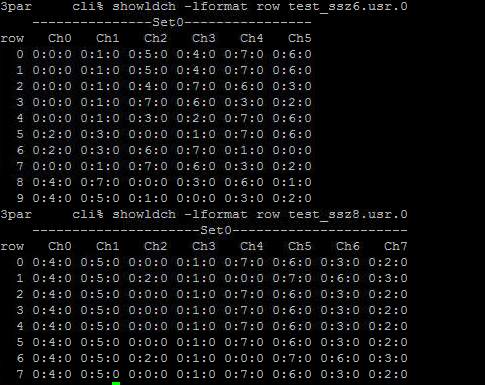

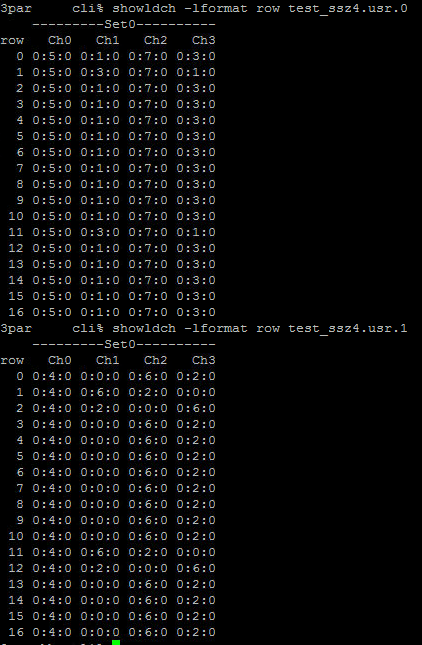

You can see the owner node of each LD, the size of the LD and how much of it is used. Because each VV is 100 GB in size, each LD holds half of it. The LD size differs from VV to VV, though. This is a result of the setsize, as you will see shortly. On a 3PAR system, RAID is implemented at the chunklet level, and LDs are built on that level. To show all chunklets used by an LD, run the command showldch LD_name. To show the sub-RAID sets of an LD, run showldch -lformat row LD_name. Here are the sub-RAID sets of one LD of each test VV.

The row column lists the sub-RAID sets. Columns Ch0 to Chn show the physical disk (PD) used for each chunklet. So why does the size of an LD depend on the setsize of the CPG/VV? For LD test_ssz8.usr.0 we saw a size of 57344 MB. Looking at the chunklets used by this LD, we see 8 rows with 8 chunklets each. Because we use RAID 5, one chunklet per row is used for parity. As a result we get: 8 (rows) * 7 (data chunklets) * 1024 (MB per chunklet) = 57344 MB.
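The arithmetic above can be sketched in Python. The 1024 MB chunklet size is taken from the calculation in this post. The rule for the number of rows (allocate whole rows until half the VV size is covered) is my assumption, inferred from the observed LD sizes rather than from documented behavior, and the function names are illustrative.

```python
import math

CHUNKLET_MB = 1024  # chunklet size implied by the calculation above


def ld_size_mb(rows: int, setsize: int, parity_per_row: int = 1) -> int:
    """Usable LD size: rows * data chunklets per row * chunklet size."""
    return rows * (setsize - parity_per_row) * CHUNKLET_MB


def rows_needed(ld_target_mb: int, setsize: int, parity_per_row: int = 1) -> int:
    """Assumed rule: allocate whole rows until the target size is covered."""
    row_data_mb = (setsize - parity_per_row) * CHUNKLET_MB
    return math.ceil(ld_target_mb / row_data_mb)


if __name__ == "__main__":
    target_mb = 100 * 1024 // 2  # each of the 2 LDs holds half of a 100 GB VV
    rows = rows_needed(target_mb, setsize=8)
    print(rows, ld_size_mb(rows, setsize=8))  # 8 57344
```

Under that assumption, a setsize of 8 needs 8 rows per LD, which reproduces the 57344 MB reported for test_ssz8.usr.0.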

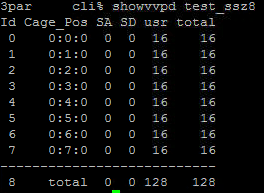

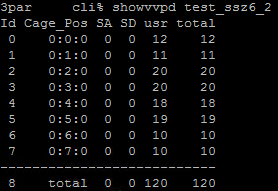

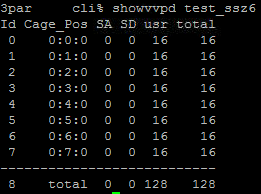

We have now seen which sub-RAID set of which LD uses which PD. To show just the chunklets used by a VV, run showvvpd VV_name. Let's first look at VV test_ssz8, which fits 8 disks perfectly.

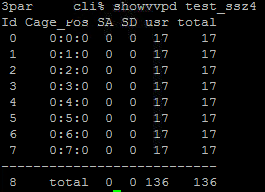

We see 16 chunklets on each disk: perfectly distributed. Why 128 in total? Because we saw 2 LDs for this VV, and each uses 8 * 8 chunklets (including RAID overhead), so 2 * 8 * 8 = 128. What about VV test_ssz4?

136 = 2 (LDs) * 17 (rows) * 4 (chunklets per row). The next screenshot shows the chunklets used by both LDs, so you can see how the LDs spread across all disks.
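These totals are easy to check with a small Python sketch. The helper names are hypothetical. The LD, row and setsize figures are the ones quoted above. It also computes the share each disk would get if the chunklets were spread perfectly evenly over the 8 disks.

```python
def total_chunklets(lds: int, rows_per_ld: int, setsize: int) -> int:
    """All chunklets a VV occupies, parity included."""
    return lds * rows_per_ld * setsize


def even_share(total: int, disk_count: int) -> float:
    """Chunklets per disk if the spread were perfectly even."""
    return total / disk_count


if __name__ == "__main__":
    disk_count = 8
    for name, rows, setsize in (("test_ssz8", 8, 8), ("test_ssz4", 17, 4)):
        total = total_chunklets(lds=2, rows_per_ld=rows, setsize=setsize)
        print(name, total, even_share(total, disk_count))
```

For test_ssz8 this gives 128 chunklets and 16 per disk, matching the showvvpd output. For test_ssz4 it gives 136 chunklets, which would be 17 per disk in an even spread.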

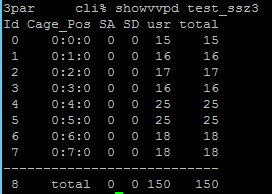

Let's look at VV test_ssz3:

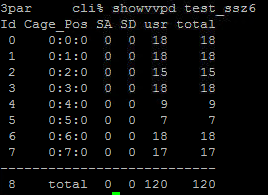

Not very well distributed! We can see a range from 15 to 25 chunklets per PD. And for VV test_ssz6?

Even worse: the range is 7 to 18 chunklets per PD. But is every VV created with a given setsize laid out the same way? In other words, does it occupy the same PDs to the same extent?

As we can see, the distribution is not good either, but it is completely different!

Fortunately, such a situation is quite easy to fix. All you have to do is change the setsize in the CPG settings and then tune either the whole system or just the CPG. The command to tune a CPG is tunesys -cpg CPG_name. After setting the setsize to 8 in the CPG and tuning it, the distribution is even.

IMHO

The setsize to disk-count ratio matters! It determines how sub-RAID sets are created. If you plan to create just one or two VVs on the system, make sure the setsize divides the disk count. Otherwise the I/O distribution across your disks can be uneven. But the more VVs you run, the smaller the impact will be. I have seen many systems with a setsize that does not fit; sometimes you cannot choose a setsize that fits the disk count, for example with 38 disks. Most of these systems have evenly distributed I/O. Just a few show poor I/O distribution.
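As a quick illustration of the 38-disk case, the following Python sketch (the function name is my own) lists every divisor of a disk count. Which setsizes a given RAID level actually accepts is not covered here, so check that against your own system.

```python
def divisors(n: int) -> list[int]:
    """Return all positive divisors of n in ascending order."""
    return [d for d in range(1, n + 1) if n % d == 0]


if __name__ == "__main__":
    print(divisors(8))   # [1, 2, 4, 8]
    print(divisors(38))  # [1, 2, 19, 38]
```

For 38 disks the only divisors are 1, 2, 19 and 38, so a setsize such as 4 or 8 can never divide the disk count.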