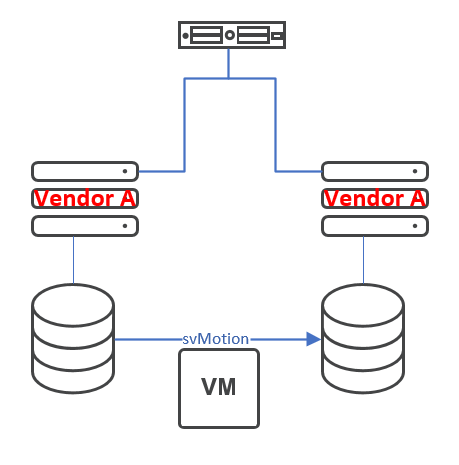

Storage vMotion between arrays of same vendor

Recently I had to investigate an interesting behavior of ESXi hosts during Storage vMotion between two arrays of the same vendor, in this case HDS (G600 -> G700/F700). In this post I list the observed symptoms and a very simple way to boost performance in this situation.

Symptoms

During Storage vMotion processes these symptoms can be seen:

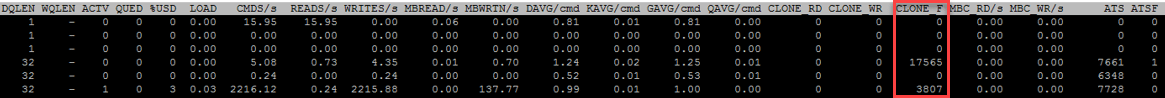

- VAAI Clone error in

esxtop

[to show VAAI counter inesxtop: press ‘u’ (to show devices), ‘f’ (to select counter), option ‘O’ must be selected]

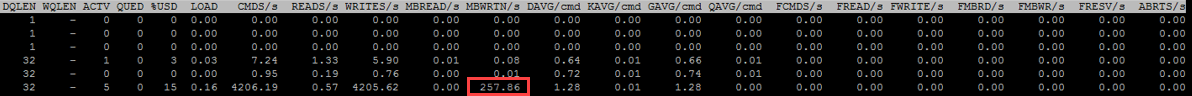

As you can see, all VAAI metrics are zero, except Clone_F which is the error counter for VAAI primitive XCopy. Furthermore Clone_F counts up continuously. - IO errors in

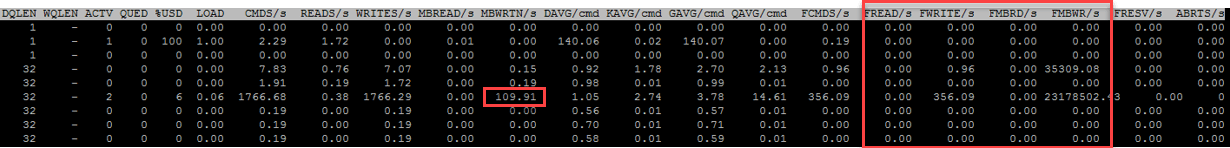

esxtop

[to show error counter inesxtop: press ‘u’ (to show devices), ‘f’ (to select counter), option ‘L’ must be selected]

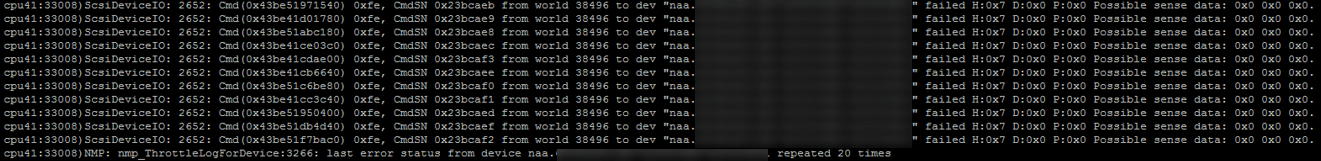

There are not just VAAI errors, also very high write failures (per second) are shown. - Tons of SCSI sense codes in

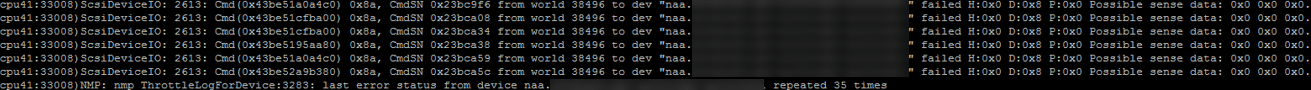

vmkernel.log

There are a massive amount of SCSI sense codes in log. These codes mean basically that IO has to be repeated because of a problem.H:0x0 D:0x8 P:0x0

H:0x7 D:0x0 P:0x0

- Path errors in vCenter.

Despite all these, Storage vMotion worked.
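The status triplets quoted from vmkernel.log above can be decoded with a few lines of shell. This is only a minimal sketch: the function names `field_of` and `decode_scsi_status` are hypothetical helpers of mine, the device status names follow the SCSI standard, and the host status names are VMware's table as I recall it, so treat unknown or surprising values as a prompt to check the VMware KB rather than as a definitive answer. In the triplet, H is the host status, D the device status and P the plugin status.

```shell
#!/bin/sh
# Sketch: decode the host (H:) and device (D:) fields of a status triplet
# such as "H:0x0 D:0x8 P:0x0" taken from vmkernel.log.

# Print the hex value of one field (first argument: H, D or P) found in a line.
field_of() {
    printf '%s\n' "$2" | sed -n "s/.*$1:\(0x[0-9a-fA-F]*\).*/\1/p"
}

# Print a short text for the host and device status of one log line.
decode_scsi_status() {
    h=$(field_of H "$1")
    d=$(field_of D "$1")
    # Host status names: assumption based on VMware's published table.
    case "$h" in
        0x0) hs="OK" ;;
        0x2) hs="BUS_BUSY" ;;
        0x3) hs="TIMEOUT" ;;
        0x5) hs="ABORT" ;;
        0x7) hs="ERROR" ;;
        0x8) hs="RESET" ;;
        *)   hs="unknown($h)" ;;
    esac
    # Device status names: SCSI status codes as defined by the SCSI standard.
    case "$d" in
        0x0)  ds="GOOD" ;;
        0x2)  ds="CHECK_CONDITION" ;;
        0x8)  ds="BUSY" ;;
        0x18) ds="RESERVATION_CONFLICT" ;;
        0x28) ds="TASK_SET_FULL" ;;
        *)    ds="unknown($d)" ;;
    esac
    echo "host=$hs device=$ds"
}
```

For the two triplets seen here, `decode_scsi_status 'H:0x0 D:0x8 P:0x0'` prints `host=OK device=BUSY` and `decode_scsi_status 'H:0x7 D:0x0 P:0x0'` prints `host=ERROR device=GOOD`, which fits the observation that the IO is being retried.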

Reason

It seems to me that ESXi always tries to use VAAI to transfer the data. The VAAI XCopy attempt fails and returns an error, so the software data mover jumps in and copies the data through the ESXi storage stack. VMware does not support VAAI between arrays (read here), so this should not happen at all. Maybe ESXi is not able to distinguish the arrays: in this case, their vendor and model names are the same.

Problem solution

The solution is quite simple: tell ESXi to use the software data mover instead of VAAI XCopy. This can easily be done by setting the ESXi advanced parameter /DataMover/HardwareAcceleratedMove to 0. This can be done without a reboot and even during a running Storage vMotion. Keep in mind that this is a host-global setting, so when the migration is finished, reset the value back to 1. Here you can read more about setting advanced parameters using the command line.
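For reference, the toggle described above can be scripted from the ESXi shell. This is a minimal sketch rather than a tested procedure: the function names `show_xcopy` and `set_xcopy` and the `ESXCLI` override variable are my own and exist only for illustration, while `esxcli system settings advanced` with `-o` (option) and `-i` (integer value) is the usual way to read and write advanced host settings.

```shell
#!/bin/sh
# Sketch: toggle hardware-accelerated move (VAAI XCopy) on one ESXi host.
# ESXCLI can be overridden (for example ESXCLI=echo) to dry-run off-host.
ESXCLI="${ESXCLI:-esxcli}"
OPTION="/DataMover/HardwareAcceleratedMove"

# Show the current and default value of the option.
show_xcopy() {
    $ESXCLI system settings advanced list -o "$OPTION"
}

# Set the option: 0 = software data mover, 1 = VAAI XCopy.
set_xcopy() {
    case "$1" in
        0|1) $ESXCLI system settings advanced set -o "$OPTION" -i "$1" ;;
        *)   echo "usage: set_xcopy 0|1" >&2; return 2 ;;
    esac
}
```

On each host involved, run `set_xcopy 0` before the migration and `set_xcopy 1` once it has finished; `show_xcopy` confirms the active value in between.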

Once the setting is changed, none of the symptoms mentioned above occur any more, and performance increases as well! I have seen throughput improve by much more than 50%.

Notes

- This behavior was observed on 6.0 hosts.

- As mentioned, this occurred on HDS arrays. I don’t know if this happens with other vendors too. At least there seems to be a special VAAI plugin for HDS: VMW_VAAIP_HDS. I will check during the next migrations.

- To show the supported VAAI primitives per device, use the command:

esxcli storage core device vaai status get
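To see at a glance which devices would attempt XCopy at all, the output of that command can be filtered. The sketch below assumes the usual output layout (an unindented device identifier followed by indented lines such as `Clone Status: supported`); `xcopy_devices` is a hypothetical helper name, and the filter should be adjusted if your ESXi build prints the fields differently.

```shell
#!/bin/sh
# Sketch: list devices whose Clone (XCopy) primitive is reported as supported.
# Reads the output of "esxcli storage core device vaai status get" on stdin.
xcopy_devices() {
    awk '
        # An unindented, non-empty line starts a new device section.
        /^[^ \t]/ { dev = $1 }
        # Indented "Clone Status: supported" marks XCopy support.
        /Clone Status:/ && $NF == "supported" { print dev }
    '
}
```

On a host this would be used as `esxcli storage core device vaai status get | xcopy_devices`.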

I have the same issue with two similar QNAP arrays. When I disable the data mover (VAAI), traffic between the arrays is fast, but inside the same array it is very slow and I get tons of errors.

My environment is:

VCSA6.7

3xESXi 6.7 DELL PE640 2x10Gbps

2xQNAP TS-1673AU-RP 2x10Gbps

Cisco 10Gbps SWITCH

Does anybody have the same issue? Any resolution?

Thanks in advance.