How do IO Limits look like [update: SIOC v1, v2]

![How do IO Limits look like [update: SIOC v1, v2]](https://vnote42.net/wp-content/uploads/2018/09/pexels-photo-1645241.jpeg)

In this blog post you can see how to configure an IO limit for a VM at the VMDK level and how ESXi hosts enforce these limits. You will also see the differences between SIOC (Storage IO Control) v1 and v2, and why IO size matters.

SIOC v1 vs. v2

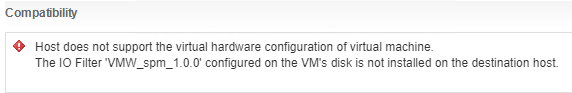

IO limits are a part of SIOC. Starting with ESXi 6.5, SIOC is available in version 2, which is configured through Storage Policy Based Management (SPBM). SIOC v1 and v2 can coexist on a host, but there are limitations when running different host versions. When you try to migrate (online or offline) a VM that has limits configured by policy (SIOC v2) from a 6.5 host to a 6.0 host, you get this compatibility error:

[Host does not support the virtual hardware configuration of virtual machine.]

The IO Filter 'VMW_spm_1.0.0' configured on the VM's disk is not installed on the destination host.

Therefore, when using SIOC v2, every ESXi host that should be able to run an IO-limited VM must run at least version 6.5.

Which version is used depends on how you set up IO limits. When you configure a limit for a VMDK in the VM settings, v1 is used. When you assign a storage policy to a VMDK, v2 is used. Assigning a policy is also the way to upgrade to SIOC v2.

How to configure

To configure using SPBM (SIOC v2):

- Go to Policies and Profiles in WebClient

- Click VM Storage Policies

- Click Create VM Storage Policy

- Under 2a Common rules check Use common rules in VM Storage policy

- Click Add component, select Storage I/O Control, select Custom

- At this point you can enter IOPs limit

- Continue rule creation to the end of the wizard

- Select the VMs you want to apply this policy to, right-click your selection, and select VM Policies and then Edit VM Storage Policies. Select your policy and press OK. After this, the policy is applied to all VMDKs of these VMs. If you want to exclude a VMDK from the policy, edit the VM settings and set the storage policy for that VMDK back to Datastore default.

To configure limits by editing VM settings (SIOC v1):

- Right-click VM and click Edit settings

- Expand the VMDK you want to limit and enter a value for Limit – IOPs

- Click OK.

Both methods can be used while the VM is running; changes are applied immediately.

How does it work

All the work is done by a component called mClock (in both v1 and v2), which runs on the ESXi host. To enforce IO limits, mClock leverages kernel IO queuing. What is kernel IO queuing? It is something you don’t want to see during normal operations. It happens when the IOs triggered by VMs on a datastore exceed its device queue. When the device queue is full, the kernel IO queue steps in to hold these IOs and passes them on to the device queue when possible. For VM IOs this behavior is fully transparent.

Because of this you get a downside of IO limits: higher latency!

During normal IO operations, kernel IO queues should not be used. Here is a screenshot of an IOmeter test in esxtop.

![]()

Short column description:

- DQLEN: device (LUN/volume) queue length

- ACTV: current count of IOs in device queue

- QUED: IOs in kernel queue

- CMDS/s: IOs per second

- DAVG/cmd: storage device latency

- KAVG/cmd: kernel latency

- GAVG/cmd: guest (VM) latency

- QAVG/cmd: kernel queue latency

As you can see, no kernel queue is used, therefore device latency is the same as guest latency.

One case where the kernel queue is used: a VM has a larger IO queue in its virtual disk controller than DQLEN and uses this queue heavily. Here is an example of such a situation. The disk controller in the VM has a configured queue length of 256. I used IOmeter to run a test with 100 outstanding IOs:

![]()

You can see that QUED, KAVG/cmd and QAVG/cmd are > 0. You can also see that ACTV + QUED ~ 100, which is the queue length used in the VM. When you have no hardware problems in your SAN (cable, SFP), QAVG/cmd should be the same as KAVG/cmd.
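The relation ACTV + QUED ~ outstanding IOs can be sketched as a very simple model for the case without an IO limit: the device queue fills up to DQLEN, and everything beyond that waits in the kernel queue. The function name and the DQLEN value of 64 are assumptions for illustration only; the real DQLEN depends on your HBA and storage configuration.

```python
from typing import Tuple


def split_outstanding(outstanding_ios: int, dqlen: int) -> Tuple[int, int]:
    """Return (ACTV, QUED) for a steady load without an IO limit.

    Simplified model: the device queue takes up to `dqlen` IOs,
    the remainder waits in the kernel queue.
    """
    if outstanding_ios < 0 or dqlen <= 0:
        raise ValueError("outstanding_ios must be >= 0 and dqlen must be > 0")
    actv = min(outstanding_ios, dqlen)  # IOs that fit into the device queue
    qued = outstanding_ios - actv       # IOs held back in the kernel queue
    return actv, qued


# DQLEN = 64 is an assumed value, not taken from the test above.
print(split_outstanding(100, 64))  # (64, 36)
print(split_outstanding(16, 64))   # (16, 0)
```

With an IO limit in place the picture changes: IOs are held in the kernel queue even though the device queue has room.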

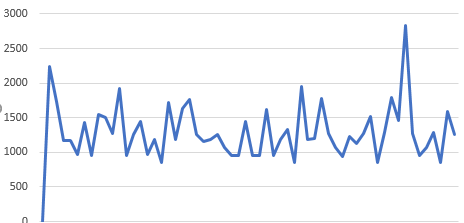

Settings for the next test:

- IO limit for VMDK is set to 2000

- IO size in IOmeter is set to 32KB

- Outstanding IOs: 16.

![]()

You can see:

- ACTV = 0 –> device queue is not used

- QUED = outstanding IOs –> kernel IO queuing

- CMDS/s ~ IO limit set

- KAVG/cmd > 0 –> kernel IO queuing

- GAVG/cmd = DAVG/cmd + KAVG/cmd

Conclusion: the kernel queue is used to throttle the number of IOs passed to the device queue, which slows down IO processing. Because IOs have to wait in the kernel queue, latency increases.
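How much latency to expect can be estimated with Little's Law (outstanding IOs = IOPS × latency). This is a rough sketch, assuming the load generator keeps a fixed number of IOs outstanding and the VM runs exactly at its limit. The function name is made up for this example, and the result is a calculated estimate, not a value read from the test above.

```python
def expected_guest_latency_ms(outstanding_ios: int, iops: float) -> float:
    """Estimate average guest latency in ms via Little's Law."""
    if outstanding_ios < 0 or iops <= 0:
        raise ValueError("outstanding_ios must be >= 0 and iops must be > 0")
    # latency [s] = outstanding IOs / IOPS, converted to milliseconds
    return outstanding_ios * 1000.0 / iops


# Test settings from above: 16 outstanding IOs, limit of 2000 IOPS
print(expected_guest_latency_ms(16, 2000))  # 8.0
```

Since GAVG/cmd = DAVG/cmd + KAVG/cmd, most of this estimated latency would show up as KAVG/cmd whenever the storage device itself answers quickly.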

IO Size matters in SIOC v1

You define an IO limit as an absolute value. But mClock does not count every IO as one IO. The size of an IO matters! By default mClock “normalizes” IOs to a multiple of 32KB. This is only the default, because the value can be adjusted using the advanced host setting SchedCostUnit. The value ranges from 4KB to 256KB and is set in bytes. The setting can be changed online and takes effect immediately.

Show current setting: esxcli system settings advanced list -o /Disk/SchedCostUnit

Set value of 64KB: esxcli system settings advanced set -o /Disk/SchedCostUnit -i 65536
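Because the value has to be given in bytes, it is easy to get the number wrong. The following small helper is only a sketch: it converts a size in KB into the byte value and builds the same esxcli command as above. The function name is hypothetical, and the range check simply mirrors the 4KB to 256KB range mentioned in the text.

```python
MIN_COST_UNIT_KB = 4    # lower bound as stated in the text
MAX_COST_UNIT_KB = 256  # upper bound as stated in the text


def sched_cost_unit_command(size_kb: int) -> str:
    """Build the esxcli command that sets SchedCostUnit to `size_kb` KB."""
    if not MIN_COST_UNIT_KB <= size_kb <= MAX_COST_UNIT_KB:
        raise ValueError("SchedCostUnit must be between 4KB and 256KB")
    size_bytes = size_kb * 1024  # the setting expects bytes
    return (
        "esxcli system settings advanced set "
        f"-o /Disk/SchedCostUnit -i {size_bytes}"
    )


print(sched_cost_unit_command(64))
# esxcli system settings advanced set -o /Disk/SchedCostUnit -i 65536
```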

The actual IO limit in mathematical notation (IO size in KB, default SchedCostUnit of 32KB):

$latex counted \ IOPS = \frac{limit}{\lceil\frac{IO-size}{32}\rceil}$

$latex \lceil \ \rceil$ means rounding up to the next integer ($latex \lceil 1.01\rceil = 2$). So an IO size in the range of 1-32KB counts as 1, 33-64KB counts as 2, …
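The same calculation as a short sketch in Python. The function name and parameters are chosen for this example; the default cost unit of 32KB corresponds to the default SchedCostUnit described above.

```python
import math


def effective_iops_v1(limit: int, io_size_kb: float, cost_unit_kb: int = 32) -> float:
    """Return the IOPS a VMDK can actually reach under a SIOC v1 limit."""
    if limit <= 0 or io_size_kb <= 0 or cost_unit_kb <= 0:
        raise ValueError("all arguments must be positive")
    # Each IO is counted as ceil(IO size / cost unit) normalized IOs.
    cost_per_io = math.ceil(io_size_kb / cost_unit_kb)
    return limit / cost_per_io


for size_kb in (32, 33, 64, 65):
    print(size_kb, round(effective_iops_v1(2000, size_kb)))
# 32 2000
# 33 1000
# 64 1000
# 65 667
```

Raising SchedCostUnit to 64KB would let a 33KB IO count as 1 again, for example `effective_iops_v1(2000, 33, cost_unit_kb=64)` gives 2000.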

To show this behavior, I used the same test as before. The only difference: 33KB instead of 32KB IO size:

![]()

IOs are cut in half.

Next 65KB IO size:

![]()

…only one third.

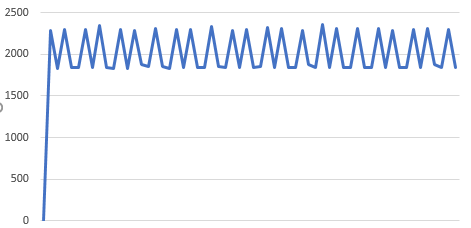

IO Size does not matter in SIOC v2

SIOC v2 does not look at IO size; every IO counts as 1. According to my tests, SIOC v2 also seems to perform more steadily. Here are two screenshots showing a limit of 2000 and a steady load of 64KB IOs.

First: v1. 64KB means one IO is counted as 2, therefore the actual limit is 1000 IOps.

Second: v2. Because size does not matter, the actual limit is 2000 IOps.
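The difference also shows up in the bandwidth a VMDK can reach under the same limit. The following sketch compares both versions for the 64KB example; function names are made up for this illustration, v1 is assumed to use the default cost unit of 32KB, and the numbers are calculated, not measured.

```python
import math


def iops_cap(limit: int, io_size_kb: float, version: int, cost_unit_kb: int = 32) -> float:
    """Return the reachable IOPS for a limit under SIOC v1 or v2."""
    if version == 1:
        # v1 normalizes IOs to multiples of the cost unit
        return limit / math.ceil(io_size_kb / cost_unit_kb)
    if version == 2:
        # v2 counts every IO as 1, regardless of size
        return float(limit)
    raise ValueError("version must be 1 or 2")


def throughput_mib_s(iops: float, io_size_kb: float) -> float:
    """Convert IOPS at a given IO size into MiB per second."""
    return iops * io_size_kb / 1024


for version in (1, 2):
    cap = iops_cap(2000, 64, version)
    print(f"v{version}: {cap:.0f} IOPS, {throughput_mib_s(cap, 64)} MiB/s")
# v1: 1000 IOPS, 62.5 MiB/s
# v2: 2000 IOPS, 125.0 MiB/s
```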

Notes

- Although you configure a Storage IO Control (SIOC) policy when using SPBM, SIOC does not have to be enabled on the datastore. See Storage IO Control Technical Overview for info about SIOC

- When a storage policy is applied to a VM that already uses a manually set IO limit, the lower limit wins and is enforced.

- When using SPBM, IO limits are not shown when you edit the VM settings. There you can only see the name of the applied policy.

- When storage policy gets detached, disk IO Limits are reset to Unlimited.

“Despite you configure a Storage IO Control (SIOC) policy when using SPBM, SIOC must not be enabled on datastore.”

I guess that’s wrong. You can have SIOC enabled or disabled on the datastore.

Hello crandler! Thanks for your feedback! Sorry for my late response! You are absolutely right, to use IO limits, SIOC can be enabled or disabled. I tested again, v1 and v2 both work this way. The error was my translation as a non-native English speaker.