Does an ESXi host survive the loss of a persistent boot device?

In this post I investigate what happens when a VMware vSphere ESXi host loses its boot device, where that device is meant to be a persistent one. For non-persistent devices such as USB sticks and SD cards, the behavior is quite clear: the whole ESXi OS runs in memory, and no heavy write operations should be directed to the device. When it breaks, ESXi does not miss it and keeps running.

With a persistent device, I was convinced that ESXi would die when the device broke. But ESXi survives. The behavior is not as clean as with non-persistent devices, but the host survived.

Reason for testing

There is a concrete reason for this test. I want to answer the question of whether it is safe to boot an ESXi host from a single disk: no RAID, just a single disk connected to an HBA. This would be an additional option for an ESXi boot device. Why? Because VMware and server vendors no longer recommend using non-persistent boot devices. There are several reasons for this:

- Changed disk partition layout in vSphere 7

- Changed boot device requirements in vSphere 7

- Bug in vSphere 7 U2

So USB devices and SD cards tend to fail within a rather short time after installing or upgrading to vSphere 7. Therefore other devices that are persistent and durable are needed. This post answers the question of whether a single disk is an option: yes, it is!

Positive test results

- To rule out a temporary phenomenon, I kept the host running for more than 10 days with a broken boot device.

- vMotion was available at any time.

- The vSAN cluster continued to function and kept using the physical disks of this host.

- Backing up VMs using NBD aka. Appliance Mode worked without problems.

- I was able to start and stop services (like SSH).

What does it look like

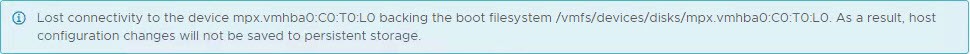

It is quite unspectacular when the disk is broken. Everything continues to work. There is a warning in vCenter about the lost connectivity.

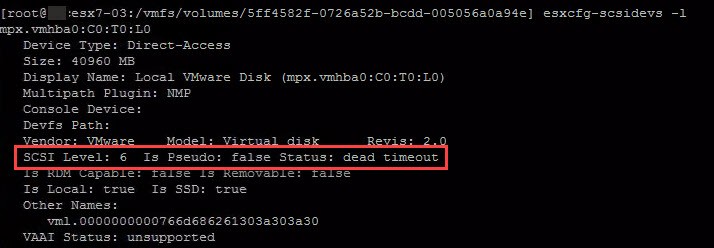

Logs are no longer written, so the latest entries show approximately the time of failure. This happens because the log locations are no longer available. Note the dead status of the device when investigating with esxcfg-scsidevs.

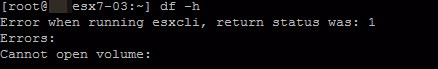

And what is shown when looking at mount point usage with the df command? Errors:

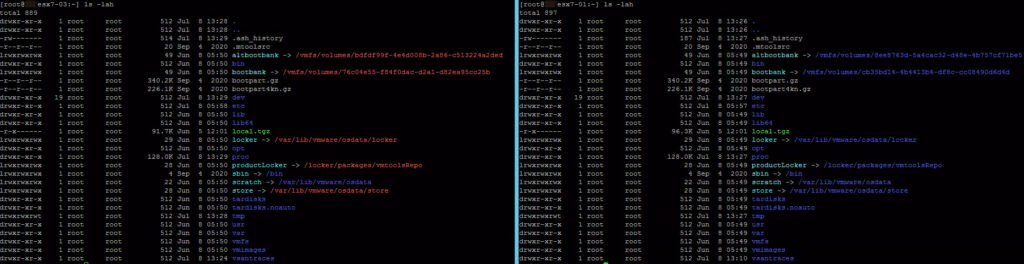

A look into the root directory does not look good either. On the left, the disk is broken; on the right, no failure was introduced.

Keep in mind

- Booting a host from a single disk is still not a recommended option. As you can see, it works, but it is not appropriate for every environment.

- A spinning hard disk has a higher probability of failure than an SSD. Therefore I highly recommend using an SSD in such a configuration.

- If you implement such a solution without redundancy, I recommend scheduling an ESXi backup script. With it you can easily restore the configuration when the device breaks. You can use the PowerCLI command Get-VMHostFirmware with the parameter BackupConfiguration to do so. Note: If the boot device has already failed, a backup can no longer be made!

- After the device is gone, you are no longer able to change advanced settings. So it is not possible to redirect logging to another disk or an external log server.
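Both points above have to be handled while the boot device is still healthy. The following is a minimal PowerCLI sketch of that preparation, not a finished script. The vCenter name, host name, backup folder and syslog target are hypothetical placeholders, and the idea of one folder per day is my own assumption to keep older bundles from being overwritten.

```powershell
# Sketch only: all names, paths and the syslog target below are hypothetical.
# Requires the VMware.PowerCLI module and a host with a working boot device.
Import-Module VMware.PowerCLI

$vCenter    = 'vcenter.example.local'            # hypothetical vCenter
$hostName   = 'esx01.example.local'              # hypothetical ESXi host
$backupRoot = 'C:\Backup\ESXi'                   # hypothetical destination folder
$logTarget  = 'udp://loghost.example.local:514'  # hypothetical syslog server

# Prompts for credentials unless a session or stored credential exists.
Connect-VIServer -Server $vCenter | Out-Null
$vmHost = Get-VMHost -Name $hostName

# One folder per day, so older configuration bundles are not overwritten.
$destination = Join-Path $backupRoot (Get-Date -Format 'yyyy-MM-dd')
New-Item -ItemType Directory -Path $destination -Force | Out-Null

# Download the host configuration bundle into the destination folder.
Get-VMHostFirmware -VMHost $vmHost -BackupConfiguration -DestinationPath $destination

# Point syslog at an external target while advanced settings can still be changed.
Get-AdvancedSetting -Entity $vmHost -Name 'Syslog.global.logHost' |
    Set-AdvancedSetting -Value $logTarget -Confirm:$false

Disconnect-VIServer -Server $vCenter -Confirm:$false
```

Run on a schedule (for example as a Windows scheduled task), this keeps a recent configuration bundle available. After replacing the disk and reinstalling ESXi, the bundle can be applied with Set-VMHostFirmware and its Restore parameter while the host is in maintenance mode.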

Conclusion

When it comes to vSphere 7, boot devices like USB drives and SD cards are to be avoided. In this post I have shown that it is safe to boot from a single disk if you consider a few points. My order of recommended boot devices is therefore:

- RAID 1 of SSDs (preferred) or HDDs.

- Server vendor’s recommended boot device, like HPE’s OS Boot Devices.

- Single disk (SSD preferred) as shown here.

Notes

- The tested ESXi version was 7 U1.

- I tested in a fully virtualized environment. But because the boot device of the ESXi VM is represented as a VMDK, it is comparable to a disk in a physical server.

- When troubleshooting SAN storage, my post about Permanent Device Loss could help.