Comparison of vSphere Transport Modes

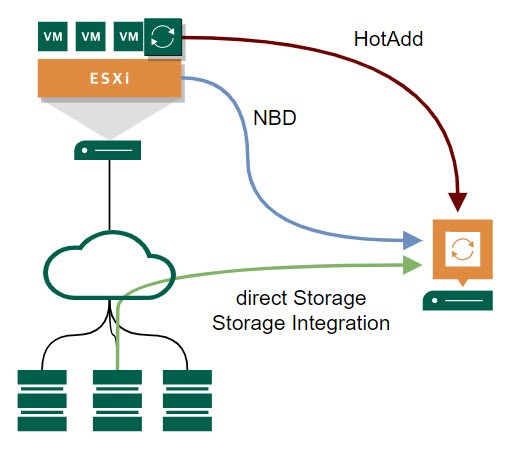

Whenever I talk to customers during installations or health checks, I spend some time on vSphere transport modes. These are Direct Storage Access, Storage Integration (which I treat as a separate mode), Virtual Appliance and Network mode. The topic has many facets that are still not widely known. This comparison of vSphere transport modes looks at a few characteristics: security, network, configuration effort, scaling, performance and possible pitfalls.

Some details are specific to Veeam Backup & Replication (VBR) as the backup solution, but most of the information applies to other solutions as well.

Direct Storage Access

- Security

  - Honestly, rather bad. Since production volumes are presented to a Veeam proxy host, a local admin/root user can delete all of them within seconds.

  - Much better if the storage system can present volumes in read-only mode.

- Network

  - As the name suggests, backup traffic stays in the storage network.

- Configuration Effort

  - More complex to configure than the other modes, partly because several layers such as storage switches and arrays have to be configured. You may also have to configure the array for every new volume that needs to be backed up.

  - Providing Direct Storage Restore can be trickier.

- Scaling

  - Scaling is simple here. As long as the proxy host is well connected to the storage network, it can transfer backup data as fast as the storage and the network can deliver.

  - If your (hopefully physical) proxy has enough resources, it will provide very high performance.

- Performance

  - This mode is often the fastest in terms of bandwidth, also for backup or restore of a single VMDK. Veeam does not limit the speed, so it is bounded by the array, the network and the repository.

  - Direct Storage Restore is only fast when VMDKs are thick provisioned eager zeroed. With Veeam you can select this disk type in the restore wizard.

- Possible Pitfalls

  - There are at least three possible problems with Direct Storage Restore.

  - Be sure to test Direct Storage Restore before you need it.

  - You will see some warnings in vCenter saying that another workload was detected. These can be ignored.
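Because Veeam does not throttle this mode, the achievable speed is set by whichever component in the data path is slowest. The Python sketch below captures that reasoning, assuming you know (or have measured) the sustained throughput of each component; the component names and MB/s figures are hypothetical placeholders, not measurements or vendor numbers.

```python
def bottleneck(components: dict[str, float]) -> tuple[str, float]:
    """Return the slowest component and its throughput in MB/s.

    Assumption: each value is the sustained throughput (MB/s) of one
    component in the data path; the job can run no faster than the minimum.
    """
    if not components:
        raise ValueError("need at least one component")
    name = min(components, key=components.get)
    return name, components[name]


# Hypothetical example values for a Direct Storage Access data path (MB/s).
path = {
    "storage array": 1500.0,
    "storage network": 1600.0,
    "proxy host": 1200.0,
    "repository": 900.0,
}

name, mbps = bottleneck(path)
print(f"Bottleneck: {name} at {mbps:.0f} MB/s")
```

Speeding up only the repository in this example would simply move the bottleneck to the proxy host, which is why array, network, proxy and repository need to be sized together.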

Storage Integration

- Security

  - Good compared to Direct Storage Access, because no production volume has to be presented to a Veeam proxy host. Only storage snapshots are exported while the backup job is running.

  - Keep in mind that the credentials of the array's administrator user are stored in the VBR database. With the necessary permissions, they are rather easy to export from the database. One more reason to keep your backup server as safe as Fort Knox.

- Network

  - Like Direct Storage Access.

- Configuration Effort

  - Easier than Direct Storage Access, because production volumes do not need to be exported. However, the backup proxy needs an access path to the array, so the storage network switches still have to be configured.

- Scaling

  - Like Direct Storage Access.

- Performance

  - See Direct Storage Access.

  - It takes much longer to start the backup stream than in the other modes, because creating the storage snapshot and presenting it to the proxy takes some time.

- Possible Pitfalls

  - By default, Veeam uses storage integration if the array has been added. If no proxy has access to the volumes, the backup will fail. So enable failover to standard backup (without storage integration) in the job's advanced settings.

  - Storage Integration works only for backup, not for restore. Restores use Direct Storage Restore, which needs the volumes presented to the proxy in read-write mode; without that, another transport mode will be used for restore.

  - When using this mode, consider keeping the VMs of a single backup job on a rather small number of storage volumes. Otherwise you end up with many storage snapshots for a single job. Also avoid running backups of VMs on the same storage volume simultaneously.
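The last pitfall is easy to check before you design your jobs: the more distinct volumes a job's VMs live on, the more storage snapshots that job triggers. The Python sketch below counts them, assuming (as a simplification) one storage snapshot per volume per job run, and also lists volumes touched by more than one job. The VM, volume and job names are hypothetical.

```python
from collections import defaultdict


def snapshots_per_job(jobs: dict[str, list[str]], vm_volume: dict[str, str]) -> dict[str, int]:
    """Count distinct volumes per job (assumed: one storage snapshot each)."""
    return {job: len({vm_volume[vm] for vm in vms}) for job, vms in jobs.items()}


def shared_volumes(jobs: dict[str, list[str]], vm_volume: dict[str, str]) -> dict[str, set[str]]:
    """Return volumes used by more than one job.

    Running those jobs at the same time means several snapshots of one volume.
    """
    users: dict[str, set[str]] = defaultdict(set)
    for job, vms in jobs.items():
        for vm in vms:
            users[vm_volume[vm]].add(job)
    return {vol: names for vol, names in users.items() if len(names) > 1}


# Hypothetical inventory: which storage volume each VM lives on.
vm_volume = {"web01": "vol-a", "web02": "vol-a", "db01": "vol-b", "app01": "vol-c", "app02": "vol-a"}
jobs = {
    "job-web": ["web01", "web02"],            # one volume -> one snapshot
    "job-mixed": ["db01", "app01", "app02"],  # three volumes -> three snapshots
}

print(snapshots_per_job(jobs, vm_volume))
print(shared_volumes(jobs, vm_volume))
```

In this made-up inventory, moving app02 off vol-a (or into job-web) would both reduce the snapshots of job-mixed and remove the overlap on vol-a.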

Virtual Appliance (HotAdd Mode)

- Security

  - Quite okay. However, backup jobs still need a few vSphere permissions such as “remove disks from a VM”.

- Network

  - Network selection is flexible here. Traffic flows from the virtual proxy to the repository server.

  - Make sure the selected network can provide the performance you need.

- Configuration Effort

  - Adding a new virtual proxy more or less just means installing a new Windows/Linux VM. Adding it as a proxy server in the VBR console is easy.

  - These VMs need to be maintained. Do not underestimate this administrative overhead!

- Scaling

  - To scale, just increase the number of virtual proxies.

  - A single proxy hits the limit of its network bandwidth rather quickly.

- Performance

  - With a single proxy, performance is usually bounded by the proxy's network bandwidth.

  - Backup and restore performance are similar.

- Possible Pitfalls

  - In larger and/or stretched environments, automatic proxy selection can lead to unwanted traffic flows. Use manual selection instead.

  - Always test backup and restore performance of a single VMDK.

  - Avoid firewalls in the data path between proxy and repository.
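Since a single HotAdd proxy is usually capped by its own network bandwidth, scaling is mostly a matter of counting proxies. The Python sketch below estimates how many are needed for a target throughput, assuming every proxy reaches a fixed fraction of its NIC line rate and that the repository caps the total no matter how many proxies you add. The efficiency factor and all figures are hypothetical assumptions, not Veeam sizing guidance.

```python
import math


def gbit_to_mb_s(gbit: float) -> float:
    """Convert a line rate in Gbit/s to MB/s (decimal units: 1 Gbit = 125 MB)."""
    return gbit * 125.0


def proxies_needed(target_mb_s: float, nic_gbit: float, efficiency: float = 0.7,
                   repository_mb_s: float = math.inf) -> int:
    """Estimate how many virtual proxies reach target_mb_s.

    Assumptions: each proxy achieves `efficiency` of its NIC line rate, and
    the repository limits the aggregate regardless of proxy count.
    """
    if target_mb_s > repository_mb_s:
        raise ValueError("target exceeds repository throughput; more proxies will not help")
    per_proxy = gbit_to_mb_s(nic_gbit) * efficiency
    return math.ceil(target_mb_s / per_proxy)


# Hypothetical: reach 2,000 MB/s with 10 Gbit vNICs and a 3,000 MB/s repository.
print(proxies_needed(2000, nic_gbit=10, repository_mb_s=3000))
```

The explicit repository check reflects the point above: once the repository (or the network in between) is saturated, adding proxies only adds VMs to maintain.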

Network (NBD mode)

- Security

  - Good with this mode. In a high-security environment, you can restrict the vSphere permissions for backup so that an attacker has few options to destroy things. Restore needs some more permissions, of course. Veeam does a good job of documenting the required permissions.

- Network

  - Traffic goes through the management interface of the ESXi host.

  - vSphere 7 provides the option to isolate backup traffic on a dedicated VMkernel adapter (vSphere Backup NFC service).

- Configuration Effort

  - If the management interface and the necessary ports of the ESXi host are reachable by a Veeam proxy, configuration is done.

- Scaling

  - Because of per-host ESXi limitations, throughput scales only with the number of ESXi hosts.

- Performance

  - Bandwidth is limited by ESXi. For production environments, 10 Gbps should be the minimum; with that you can achieve more than 160 MB/s of backup bandwidth. Expect less bandwidth for restore operations.

  - In terms of bandwidth, NBD is the slowest mode.

  - The vSphere overhead before the backup stream starts is minimal. So this mode can be faster in terms of total job duration if you back up a bunch of small VMs.

  - Veeam v11 can increase the number of NBD streams.

- Possible Pitfalls

  - Currently, backup traffic isolation does not work in routed networks.

  - Low performance for single-VMDK backup and restore.
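Whether NBD finishes a batch of small VMs sooner, despite its lower bandwidth, comes down to per-VM preparation time versus transfer time. The Python model below makes that trade-off explicit, assuming a fixed preparation time per VM, a constant stream bandwidth per mode and strictly sequential processing. The second mode is labeled HotAdd with invented overhead and bandwidth values; replace all numbers with your own measurements.

```python
from dataclasses import dataclass


@dataclass
class Mode:
    name: str
    overhead_s: float      # preparation per VM before data flows (assumed)
    bandwidth_mb_s: float  # sustained stream bandwidth (assumed)


def job_duration_s(mode: Mode, vm_sizes_gb: list[float]) -> float:
    """Total time when VMs are processed one after another.

    Simplification: the per-VM overhead is paid for every VM and there is
    no parallelism; real jobs process several VMs concurrently.
    """
    return sum(mode.overhead_s + size_gb * 1000 / mode.bandwidth_mb_s for size_gb in vm_sizes_gb)


# Hypothetical figures, for illustration only.
nbd = Mode("NBD", overhead_s=10, bandwidth_mb_s=160)
hotadd = Mode("HotAdd", overhead_s=60, bandwidth_mb_s=500)

small_vms = [5.0] * 20  # twenty VMs with 5 GB to transfer each
large_vm = [500.0]      # one VM with 500 GB to transfer

for label, vms in (("20 small VMs", small_vms), ("1 large VM", large_vm)):
    for mode in (nbd, hotadd):
        print(f"{label:13} {mode.name:7} {job_duration_s(mode, vms):8.0f} s")
```

With these made-up numbers, NBD wins for the twenty small VMs (825 s vs. 1400 s) while the higher-bandwidth mode wins for the single large VM; where the crossover lies depends entirely on the real overhead and bandwidth in your environment.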

Conclusion

Choosing the right transport mode for a particular workload is no trivial task. As you can see, there is a whole range of aspects to consider. If you are looking for the best performance, you will go with Direct Storage Access or HotAdd mode. If security is your main concern, Network mode will be your choice. IMHO, a well-sized and well-designed HotAdd environment will fit most requirements.

Notes

- Get your trial of Veeam Backup & Replication!

- You can find detailed information on transport modes in VMware’s Virtual Disk Development Kit Programming Guide.

- If your Direct Storage Access backup is slow, my post about Poor SAN transport mode performance may help.

I think you put some DirectSAN points in the wrong category (snapshot-related stuff); DirectSAN does not use storage snapshots.

Thanks Mihkel for your feedback! You are right, there are no storage snapshots with Direct Storage Access. Those points were intended for Storage Integration. I have corrected that.